One thing you can learn from Jeremy Howard of Fast.AI is that preparing the data is a crucial step in building a state-of-the-art Deep Learning model.

For classifying cat and dog images, it is good to rotate some of the pictures slightly, flip some of them horizontally, and even warp them. This helps the model capture the essence of the images rather than a particular orientation or shape. If some of the pictures that reach the classifier in production are shot from an unusual perspective, the model will be better prepared for them.

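Do not apply every part of this technique (called "data augmentation") to satellite imagery. These photographs are typically taken from only one perspective, looking straight down onto the surface of the Earth, so perspective warping produces views the model will never see. Flips and rotations, including vertical flips, remain useful there because a top-down view has no natural "up".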
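As a rough illustration of the idea, here is a minimal sketch in plain Python that treats an image as a list of pixel rows. The function names and the `allow_vertical_flip` switch are hypothetical and are not part of the Fast.AI library; warping is left out to keep the example short.

```python
import random

def flip_horizontal(img):
    """Mirror each row left to right."""
    return [row[::-1] for row in img]

def flip_vertical(img):
    """Reverse the order of the rows, top to bottom."""
    return [row[:] for row in img[::-1]]

def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def augment(img, allow_vertical_flip=False, rng=random):
    """Return a randomly transformed copy of img.

    allow_vertical_flip is a hypothetical switch: leave it off for
    everyday photos, turn it on for top-down imagery.
    """
    out = [row[:] for row in img]
    if rng.random() < 0.5:
        out = flip_horizontal(out)
    if allow_vertical_flip and rng.random() < 0.5:
        out = flip_vertical(out)
    return out

pet_photo = [[1, 2],
             [3, 4]]
print(augment(pet_photo))                            # cat/dog style
print(augment(pet_photo, allow_vertical_flip=True))  # satellite style
```

Each call returns a new variant of the same picture, so the model sees slightly different inputs every epoch while the label stays the same.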

Jeremy says that when competing on Kaggle, it is reasonable to aim for the top 10% of the leaderboard. As he puts it:

> In my experience, all the people in the top 10% of the competition really know what they're doing. So if you get into the top 10% then that's a really good sign.

He also points to an interesting function in Python's standard library called `partial` (it lives in the `functools` module). It partially applies arguments to another function and returns a new function in which the values of some parameters are already set by you. Jeremy says that creating such partially applied functions is common when doing Deep Learning with the Fast.AI library.

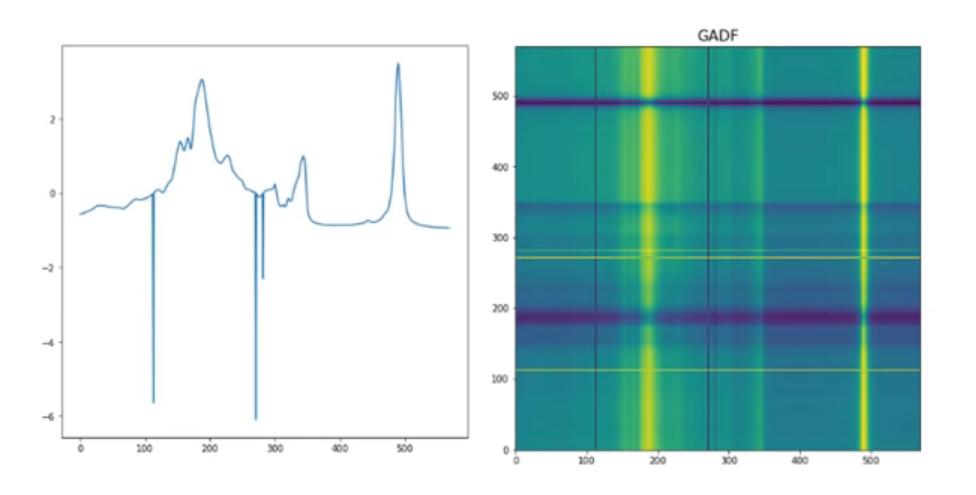
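A small sketch of how `partial` behaves, using only the standard library. The metric `thresholded_accuracy` is a hypothetical stand-in written for this example and is not the Fast.AI implementation.

```python
from functools import partial

def thresholded_accuracy(probs, targets, thresh=0.5):
    """Fraction of labels where (prob > thresh) agrees with the 0/1 target."""
    hits = sum((p > thresh) == bool(t) for p, t in zip(probs, targets))
    return hits / len(targets)

# Fix thresh=0.2 once; the result is a new function that still
# expects probs and targets.
acc_02 = partial(thresholded_accuracy, thresh=0.2)

probs = [0.9, 0.3, 0.1, 0.6]
targets = [1, 1, 0, 0]

print(thresholded_accuracy(probs, targets))  # default threshold of 0.5
print(acc_02(probs, targets))                # same metric with thresh=0.2
```

This is handy whenever a library expects a function with a fixed signature, such as a metric that takes only predictions and targets, but you want to change one of its defaults.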

Finally, you can use image-based Machine Learning methods to classify things that are not originally images. For example, you can convert time series into images and then train your model on those images.
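One simple way to turn a time series into an image is a recurrence plot, where pixel (i, j) is lit when the values at times i and j are close. This is only one possible encoding, chosen here for illustration and not necessarily the one discussed in the lesson; the function name and the `eps` tolerance are assumptions.

```python
def recurrence_plot(series, eps):
    """Return an n x n binary image for a series of length n.

    Pixel (i, j) is 1 when series[i] and series[j] differ by at most eps.
    """
    return [[1 if abs(a - b) <= eps else 0 for b in series] for a in series]

# A toy series that alternates between two levels.
series = [0.0, 1.0, 0.0, 1.0]
image = recurrence_plot(series, eps=0.1)

for row in image:
    print(row)
```

The resulting grid can be saved as a picture and fed to an ordinary image classifier; periodic series show up as regular checkerboard or diagonal patterns.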

Source: Lesson 3: Deep Learning 2019 - Data blocks; Multi-label classification; Segmentation